Kubernetes Basics

What is Kubernetes?

Ans: Kubernetes is an open-source container orchestration tool, originally developed by Google, that is used for multi-environment, multi-container deployments.

Kubernetes is an open-source tool that was originally developed by Google. It has two kinds of entities: the master and the nodes. The master runs the API server, which handles all communication with the nodes. We reach the API server with kubectl, and the API server in turn contacts the nodes. Several other control-plane services back the API server, such as the controller manager, etcd, and the scheduler.

Why do we use Kubernetes?

Ans: For production-ready deployment of microservices and small apps, with fewer failures and less downtime, plus support for backups and restores.

Kubernetes Architecture

Cloud-based k8s services

GKE (Google Kubernetes Engine)

AKS (Azure Kubernetes Service)

Amazon EKS (Amazon Elastic Kubernetes Service)

Features of Kubernetes

Orchestration (clustering of any number of containers running on different networks)

Autoscaling (Vertical & Horizontal)

Auto Healing

Load Balancing

Platform independent (Cloud /Virtual/Physical)

Fault Tolerance (node/Pod failure)

Rollback (Going back to the previous version)

Health monitoring of containers

Batch Execution (One-Time, Sequential, Parallel)

Working with Kubernetes

We create a manifest (.yaml) file.

We apply it to the cluster (master) to bring the cluster into the desired state.

A Pod runs on a node, which is controlled by the master.

Components of Control Plane

Kube-API-server

The API server interacts directly with the user (we apply a .yaml or .json manifest to kube-apiserver).

The API server is designed to scale horizontally: more instances can be deployed as the load grows.

Kube-API-server is the front end of the control plane

etcd

Stores metadata and the status of the cluster.

etcd is a consistent and highly available key-value store.

Source of truth for the cluster state (information about the state of the cluster)

etcd has the following features:

Fully Replicated: The entire state is available on every node in the etcd cluster.

Secure: Implements automatic TLS with optional client-certificate authentication

Fast: Benchmarked at 10,000 writes per second.

Kube-scheduler (action)

When users request the creation of Pods, kube-scheduler acts on these requests by deciding where each Pod should run.

Handles Pod placement

Kube-scheduler assigns each Pod to a suitable node, where the Pod is then created and run.

The scheduler watches for newly created Pods that have no node assigned. For every Pod that the scheduler discovers, it becomes responsible for finding the best node for that Pod to run on.

The scheduler compares the resources each Pod requests in its manifest with the capacity available on each node, and schedules the Pods on nodes accordingly.
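
The scheduling idea described above can be sketched in a few lines of Python. This is a simplified illustration, not the real kube-scheduler: the `Node` and `PodRequest` classes, the node names, and the "most free CPU wins" scoring rule are all assumptions made for this example. It only shows the two-step pattern of first filtering out nodes that cannot fit the Pod and then scoring the rest.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    # Hypothetical view of a node's spare capacity.
    name: str
    free_cpu_millicores: int
    free_memory_mib: int


@dataclass
class PodRequest:
    # Hypothetical view of what a Pod asks for.
    name: str
    cpu_millicores: int
    memory_mib: int


def pick_node(pod: PodRequest, nodes: List[Node]) -> Optional[Node]:
    """Filter out nodes that cannot fit the Pod, then pick the one with the most free CPU."""
    # Filtering step: keep only nodes with enough free CPU and memory.
    feasible = [
        n for n in nodes
        if n.free_cpu_millicores >= pod.cpu_millicores
        and n.free_memory_mib >= pod.memory_mib
    ]
    if not feasible:
        return None  # no node fits, so the Pod would stay unscheduled
    # Scoring step (deliberately simplistic): prefer the most free CPU.
    return max(feasible, key=lambda n: n.free_cpu_millicores)


nodes = [
    Node("node-a", free_cpu_millicores=500, free_memory_mib=1024),
    Node("node-b", free_cpu_millicores=2000, free_memory_mib=4096),
    Node("node-c", free_cpu_millicores=3000, free_memory_mib=256),
]
chosen = pick_node(PodRequest("nginx", cpu_millicores=1000, memory_mib=512), nodes)
print(chosen.name if chosen else "unschedulable")  # node-b
```

Here node-a lacks CPU and node-c lacks memory, so node-b is the only feasible choice.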

Controller-Manager

Makes sure the actual state of the cluster matches the desired state.

Two kinds of controller manager:

kube-controller-manager runs the core controllers in every cluster, whether it is on the cloud or not.

If K8s runs on a cloud provider, a cloud controller manager additionally runs the cloud-specific controllers.

Controllers that the cloud controller manager runs on the master:

Node Controller: Checks with the cloud provider to determine whether a node has been deleted in the cloud after it stops responding.

Route Controller: Responsible for setting up network routes in your cloud.

Service Controller: Responsible for the load balancers on your cloud that back Services of type LoadBalancer.

Volume-Controller: For creating, attaching, and mounting volumes and interacting with the cloud provider to orchestrate volume.

Kubelet

The agent running on the node

Listens to the Kubernetes master (Pod creation requests)

Serves its API on port 10250 (10255 is the optional read-only port)

Sends success/failure reports to the master.

Container Engine

Works with kubelet

Pulling images

Start/Stop containers

Exposing containers on ports specified in the manifest

Kube-proxy

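Maintains the network rules on each node

These rules route traffic addressed to a Service to the Pods behind it. The dynamic IP address of each Pod is assigned by the cluster's network plugin, not by kube-proxy itself.

Kube-proxy runs on each node, so that Pods on every node can be reached through Services.

These three components (kubelet, container engine, and kube-proxy) together make up a node.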

POD

The smallest unit in Kubernetes

POD is a group of one or more containers that are deployed on the same host.

A cluster is a group of nodes.

A cluster has at least one worker node and a master node.

In Kubernetes, the control unit is the POD, not the containers.

Consist of one or more tightly coupled containers

POD runs on a node, which is controlled by the master.

Kubernetes only knows about PODS

Containers cannot be started without a Pod.

One Pod usually contains one container

Multi-container Pods

Share access to memory space

Connect using localhost:<container-port>

Share access to the same volume

Containers within POD are deployed in an all-or-nothing manner.

The entire POD is hosted on the same node

There is no auto-healing or scaling by default.

Minikube install

Minikube is a single-node Kubernetes cluster.

Minikube is a lightweight Kubernetes implementation that creates a VM on your local machine and deploys a simple cluster containing only one node. Minikube is available for Linux, macOS, and Windows systems.

Prerequisites

2 CPUs or more

2GB of free memory

20GB of free disk space

Internet connection

Container or virtual machine manager, such as: Docker, QEMU, Hyperkit, Hyper-V, KVM, Parallels, Podman, VirtualBox, or VMware Fusion/Workstation

Install Docker:

sudo apt-get install docker.io

Install Minikube

Run the following commands to download and install the Minikube binary.

curl -LO https://storage.googleapis.com/minikube/releases/latest/minikube-linux-amd64

sudo install minikube-linux-amd64 /usr/local/bin/minikube

Run the following command to start Minikube:

minikube start

To start Minikube with the Docker driver:

minikube start --driver=docker

To make Docker the default driver:

minikube config set driver docker

Interact with your cluster

If you already have Kubectl installed, you can now use it to access your shiny new cluster:

kubectl get po -A

Open a shell inside the Minikube node with the following command:

minikube ssh

Install kubectl with the following command:

#inside of Cluster

sudo snap install kubectl

#outside of Cluster

sudo apt-get install kubectl

Note: If snap reports an error about classic confinement, run the following command:

#Inside the Cluster

sudo snap install kubectl --classic

#Outside of the Cluster (the --classic flag is specific to snap, so the apt-get command stays the same)

sudo apt-get install kubectl

Alternatively, minikube can download the appropriate version of kubectl, and you should be able to use it like this:

minikube kubectl -- get po -A

#Install kubectl in your CLI

curl -LO https://dl.k8s.io/release/$(curl -L -s https://dl.k8s.io/release/stable.txt)/bin/linux/amd64/kubectl \
&& sudo install kubectl /usr/local/bin && rm kubectl

Namespace:

In Kubernetes, namespaces provide a mechanism for isolating groups of resources within a single cluster.

A new cluster starts with four namespaces:

default

kube-system

kube-node-lease

kube-public

To see the pods in kube-system, run the following command:

kubectl get pods --namespace=kube-system

Service Proxy:

Every Pod has an IP address, and every node also has an IP address. Kube-proxy routes traffic so that the Pods can be reached through Services.

Kubelet:

The kubelet talks to the API server to report Pod health and node status.

To see the namespaces, run the following command:

kubectl get namespaces

To create a namespace, run the following command:

kubectl create namespace <name>

Note: Replace <name> with the name you want to give the namespace.

Containers run inside a Pod. No build command works here, so the Pod must be given a prebuilt Docker image.

- Take the image from Docker Hub

To create a Pod in Kubernetes, you need to write a manifest, known as a .yaml file.

(Search Google for "Kubernetes pod" to find the official example.)

Write a configuration file like the following .yaml file:

    apiVersion: v1
    kind: Pod
    metadata:
      name: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:1.14.2
        ports:
        - containerPort: 80

Note:

apiVersion: v1 > This specifies the version of the Kubernetes API being used. In this case, it's v1, which refers to the core Kubernetes API version.

kind: Pod > This specifies the type of Kubernetes resource you're creating. Here, it's a Pod, so the value is Pod.

metadata > This section contains metadata about the Pod, such as its name and namespace.

name > This is the name of the Pod, which in this case is set to nginx.

namespace > This is the namespace in which the Pod will be created. Namespaces are a way to organize resources within a Kubernetes cluster. The example above does not set one, so the Pod is created in the default namespace unless another one is given when applying the file.

spec > This is where you define the specification of the Pod, including the containers that will run inside it.

containers > This is a list that defines the containers to be run inside the Pod. In this case, there's one container.

- name > This is the name of the container, set to nginx.

The dash (-) marks an item in a list. We can define more than one container inside a Pod.

image > This specifies the Docker image to be used for the container, here nginx:1.14.2.

ports > This section defines the network ports that the container will listen on.

containerPort > This is the port number that the container's application is listening on. In this case, the application inside the container listens on port 80.
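
Since the API server also accepts .json manifests, the same Pod can be written as a plain data structure and serialized. The sketch below is one possible way to do that in Python, using only the standard library; the variable name `pod_manifest` is just an illustration, and the field values are copied from the nginx example above.

```python
import json

# The same Pod as the YAML manifest above, expressed as a Python dictionary.
pod_manifest = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "nginx"},
    "spec": {
        # "containers" is a list, which is what the dash (-) means in YAML.
        "containers": [
            {
                "name": "nginx",
                "image": "nginx:1.14.2",
                "ports": [{"containerPort": 80}],
            }
        ]
    },
}

# Print the manifest as JSON so it can be saved to a file.
print(json.dumps(pod_manifest, indent=2))
```

If the output is saved to a file (for example a hypothetical pod.json), it can be applied with kubectl apply -f in the same way as a .yaml file.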

Now we will create the Pod with the following command:

kubectl apply -f <file name>

Note: Replace <file name> with the name of your saved .yaml file.

To check whether the Pod was created, run the following command:

kubectl get pods --namespace=<name>

Note: Replace <name> with your namespace name.

Note: Now we can say that these pods are running inside Minikube Kubernetes.

Now we will go inside Minikube and look at the Docker containers and images.

minikube ssh

Inside, we can list the Docker images and containers with the usual Docker commands.

Note: Up until now, what I have done has happened inside Kubernetes.

To find the IP address of this pod, follow the command:

kubectl get po --namespace=<name> -o wide

Note: Replace the <name> with your namespace name.

We can see an IP address here. We cannot use this IP to expose the application, because it is only reachable inside the Minikube cluster.

Delete pods:

kubectl delete -f <.yaml>

Deployment of our application.

Important note: If a Docker container crashes or stops, it cannot restart itself. For this reason, we use Kubernetes. But if we delete our Kubernetes Pods, the application will be gone. That means we cannot use bare Pods at a production level. In this case, we need a Deployment.

Deployment:

To manage Pods, we need a Deployment.

A Deployment is basically a configuration or set of rules for your application, Pods, and containers. It declares the desired state, such as the number of replicas and the container ports, and the control plane keeps the nodes in line with it.

A cluster may run many applications, but each application has a single Deployment file.

Note: Search Google for a sample Kubernetes Deployment and select the official page.

Link: https://kubernetes.io/docs/concepts/workloads/controllers/deployment/

Note: Create a deployment.yaml file with the following configuration.

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nginx-deployment
      labels:
        app: nginx
      namespace: shawon
    spec:
      replicas: 3
      selector:
        matchLabels:
          app: nginx
      template:
        metadata:
          labels:
            app: nginx
        spec:
          containers:
          - name: nginx
            image: nginx:1.14.2
            ports:
            - containerPort: 80

The snippet above is a YAML configuration file for creating a Kubernetes Deployment. Let's break down each section and understand its purpose:

apiVersion: This specifies the version of the Kubernetes API being used. In this case, it's apps/v1, which corresponds to the version that deals with higher-level abstractions like Deployments.

kind: This specifies the type of Kubernetes resource you're creating. Here, it's a Deployment, so the value is Deployment. (Auto healing, auto scaling)

metadata: This section contains metadata about the Deployment, such as its name and labels.

name: This is the name of the Deployment, set to nginx-deployment.

labels: These are key-value pairs that are used to identify and categorize resources. Here, the label app: nginx is assigned to the Deployment.

spec: This is where you define the specification of the Deployment, including the desired number of replicas, selector, and template for creating Pods.

replicas: This specifies the desired number of replica Pods that should be created and maintained. In this case, it's set to 3, so the Deployment will ensure that three identical Pods are running.

selector: This is used to select which Pods the Deployment should manage. It uses labels to make the selection.

matchLabels: This specifies that the Deployment should manage Pods with the label app: nginx.

template: This is the template used to create the Pods managed by the Deployment.

metadata: This section contains labels that will be applied to the Pods created from this template. In this case, the label app: nginx is applied.

spec: This section defines the specification for the Pods created by the Deployment.

containers: This is an array that defines the containers to be run inside the Pods.

name: This is the name of the container, set to nginx.

image: This specifies the Docker image to be used for the container. The image nginx:1.14.2 will be pulled from a container registry.

ports: This section defines the network ports that the container will listen on.

containerPort: This is the port number that the container's application (nginx in this case) is listening on. The value is 80, which is the default port for HTTP.

In summary, this YAML configuration defines a Kubernetes Deployment named nginx-deployment that manages three replica Pods, each running an nginx container based on the nginx:1.14.2 image. The Pods are labeled with app: nginx and listen on port 80 for HTTP traffic.
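
One detail worth checking before applying a Deployment is that the selector and the Pod template agree: the labels under matchLabels must also appear on the template, otherwise the API server rejects the manifest. The following sketch shows that check on a Python dictionary mirroring the manifest above. The helper `selector_matches_template` is a hypothetical function written for this example, not part of any Kubernetes client library.

```python
def selector_matches_template(deployment: dict) -> bool:
    """Return True if every selector label also appears on the Pod template."""
    selector = deployment["spec"]["selector"]["matchLabels"]
    template_labels = deployment["spec"]["template"]["metadata"].get("labels", {})
    return all(template_labels.get(key) == value for key, value in selector.items())


# The Deployment from above, written as a dictionary.
deployment = {
    "apiVersion": "apps/v1",
    "kind": "Deployment",
    "metadata": {
        "name": "nginx-deployment",
        "namespace": "shawon",
        "labels": {"app": "nginx"},
    },
    "spec": {
        "replicas": 3,
        "selector": {"matchLabels": {"app": "nginx"}},
        "template": {
            "metadata": {"labels": {"app": "nginx"}},
            "spec": {
                "containers": [
                    {
                        "name": "nginx",
                        "image": "nginx:1.14.2",
                        "ports": [{"containerPort": 80}],
                    }
                ]
            },
        },
    },
}

print(selector_matches_template(deployment))  # True
```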

Run the following command:

kubectl apply -f deployment.yaml

Note: Here I deployed the NGINX server.

To check how many Pods were created, run this command:

kubectl get pods

Note: We will see three Pods running. Without a namespace in deployment.yaml, they run under the default namespace. If we define a namespace in our deployment.yaml file, the nginx server runs under that namespace, and we must add -n <namespace> to the command to see the Pods.

Auto Healing:

Now, if we delete any Pod from that namespace or the default namespace, Kubernetes deletes it but automatically creates another one.

kubectl delete pods <pods_name>

Note: After deleting the Pod, list the Pods again, and we will see that a new Pod has been created and is running successfully.

kubectl get pods -o wide -n <namespace>

Note: Once we run the above command, we will see that every Pod has been assigned a different IP, and a replacement Pod gets a new one. Pod IPs are therefore not a reliable way to reach an application. To give all the Pods a single stable address, we need to create a Service.
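
The auto-healing behaviour seen above can be pictured as a loop that keeps comparing the desired number of replicas with the Pods that actually exist. The sketch below is a toy model of that idea in Python, not Kubernetes code: `new_pod` and `reconcile` are hypothetical helpers, and the Pod names and IP addresses are made up.

```python
import itertools

_pod_ids = itertools.count(1)


def new_pod() -> dict:
    """Create a stand-in Pod with a fresh name and IP (both invented for this sketch)."""
    n = next(_pod_ids)
    return {"name": f"nginx-deployment-{n}", "ip": f"10.244.0.{n + 1}"}


def reconcile(desired_replicas: int, pods: list) -> list:
    """Add or remove Pods until the actual count equals the desired count."""
    pods = list(pods)
    while len(pods) < desired_replicas:
        pods.append(new_pod())  # too few: start a replacement
    while len(pods) > desired_replicas:
        pods.pop()              # too many: remove the surplus
    return pods


pods = reconcile(3, [])    # initial rollout: three Pods
deleted = pods.pop(0)      # simulate deleting one Pod by hand
pods = reconcile(3, pods)  # the controller notices the gap and heals it

print(len(pods))                                      # 3
print(deleted["name"] in [p["name"] for p in pods])   # False
```

The replacement Pod has a new name and a new IP, which is why clients should not depend on individual Pod addresses.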

Service

To give the Pods a single stable IP, we create a Service.

First, search Google for "service in Kubernetes" and open the official page.

Link: https://kubernetes.io/docs/concepts/services-networking/service/

Types of Services:

ClusterIP: Exposes the Service on a cluster-internal IP. Choosing this value makes the Service only reachable from within the cluster. This is the default that is used if you don't explicitly specify a type for a Service. You can expose the Service to the public internet using an Ingress or a Gateway.

NodePort: Exposes the Service on each Node's IP at a static port (the NodePort). To make the node port available, Kubernetes sets up a cluster IP address, the same as if you had requested a Service of type: ClusterIP.

LoadBalancer: Exposes the Service externally using an external load balancer. Kubernetes does not directly offer a load-balancing component; you must provide one, or you can integrate your Kubernetes cluster with a cloud provider.

ExternalName: Maps the Service to the contents of the externalName field (for example, to the hostname api.foo.bar.example). The mapping configures your cluster's DNS server to return a CNAME record with that external hostname value. No proxying of any kind is set up.

Now check the IP:

kubectl get pods -o wide -n shawon

Example: Output

Note: We can see that each Pod has a different IP. Now we will create a Service with the following manifest.

Load Balancer service

#vim service.yaml

    apiVersion: v1
    kind: Service
    metadata:
      name: my-service
      namespace: shawon
    spec:
      type: LoadBalancer
      selector:
        app: nginx
      ports:
      - port: 80
        targetPort: 80
        protocol: TCP
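
To see what the selector and targetPort in this manifest do, here is a small Python model of a Service picking its backends. It is only a sketch under simplifying assumptions: the Pod list and IP addresses are invented, `endpoints_for` is a hypothetical helper, and the round-robin rotation stands in for whatever balancing the real proxy applies (which depends on how the cluster is configured).

```python
import itertools

# Invented Pods: two match the selector app: nginx, one does not.
pods = [
    {"name": "nginx-1", "ip": "10.244.0.5", "labels": {"app": "nginx"}},
    {"name": "nginx-2", "ip": "10.244.0.6", "labels": {"app": "nginx"}},
    {"name": "other-1", "ip": "10.244.0.7", "labels": {"app": "redis"}},
]


def endpoints_for(selector: dict, pods: list, target_port: int) -> list:
    """Return "ip:port" for every Pod whose labels contain all selector labels."""
    return [
        f'{pod["ip"]}:{target_port}'
        for pod in pods
        if all(pod["labels"].get(key) == value for key, value in selector.items())
    ]


endpoints = endpoints_for({"app": "nginx"}, pods, target_port=80)

# Simplified load balancing: hand out the endpoints in rotation.
backend = itertools.cycle(endpoints)
first_three = [next(backend) for _ in range(3)]

print(endpoints)    # ['10.244.0.5:80', '10.244.0.6:80']
print(first_three)  # ['10.244.0.5:80', '10.244.0.6:80', '10.244.0.5:80']
```

Clients only ever talk to the Service address; which Pod answers is decided behind it.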

Now run the following commands to apply the service.yaml file and list the Services.

kubectl apply -f service.yaml

kubectl get svc -n shawon

Example output:

Note: The CLUSTER-IP column shows that a cluster IP has been assigned to the Service.

Now we want to access the application. In this case, run the following command:

minikube service my-service -n shawon --url

Note: Replace my-service and shawon with your own service name and namespace.

Once you get the URL, pass it to curl, for example:

curl -L http://192.168.49.2:32469

Debugging:

minikube service my-service -n shawon

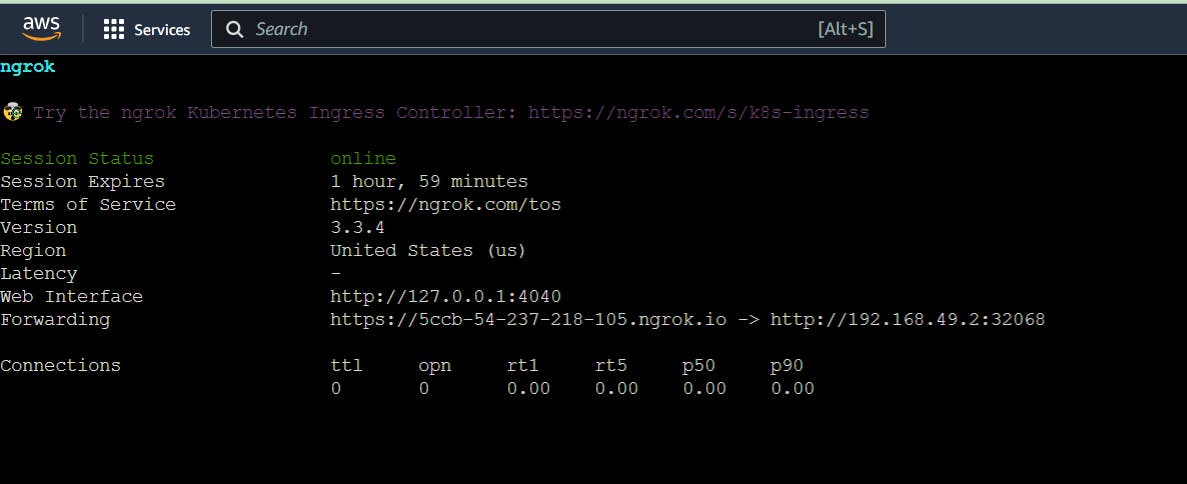

Now open your local K8s cluster in the browser. For this, we will use a tunnel (ngrok).

Everything we have done so far is only reachable from my local machine. Now we need to make it accessible from an Internet browser. For this, we will use ngrok.

ngrok: ngrok is a cross-platform application that enables developers to expose a local development server to the Internet with minimal effort. The software makes your locally-hosted web server appear to be hosted on a subdomain of ngrok.com, meaning that no public IP or domain name on the local machine is needed. Similar functionality can be achieved with Reverse SSH Tunneling, but this requires more setup as well as hosting of your own remote server.

Search Google for ngrok and sign up for free.

Right-click the Linux download option and copy the link address.

Go to your local server's command line and run the following command:

wget <link>

#wget https://bin.equinox.io/c/bNyj1mQVY4c/ngrok-v3-stable-linux-amd64.tgz

- Now we will see a compressed archive downloaded to our local server.

For example, ngrok-v3-stable-linux-amd64.tgz

- Extract the .tgz file with the following command.

tar -xvzf <file>

#example:

#tar -xvzf ngrok-v3-stable-linux-amd64.tgz

Note: x = extract, v = verbose, z = gzip, f = file.

Authenticate yourself with ngrok: Go to the ngrok page and you will see option 2, <Connect your account>. Copy the command shown there and run it on your local machine.

Now take the URL you used in the curl command and build an ngrok command from it, like:

./ngrok http 192.168.49.2:32068

Note: If you see a 6022 error, run the following command instead.

ngrok http 192.168.49.2:32068

The output will look like this:

Note: It means ngrok makes a tunnel. Now copy the Forwarding link, paste it into a tab, and run it.

Important Note: This is set up for testing purposes. In the next steps, we will see how it works at the production level.

Ingress controller.

Create an ingress file:

    apiVersion: networking.k8s.io/v1
    kind: Ingress
    metadata:
      name: minimal-ingress
      annotations:
        nginx.ingress.kubernetes.io/rewrite-target: /
      namespace: shawon
    spec:
      ingressClassName: nginx-example
      rules:
      - http:
          paths:
          - path: /testpath
            pathType: Prefix
            backend:
              service:
                name: my-service
                port:
                  number: 80
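
The pathType: Prefix setting in this manifest matches request paths element by element, split on "/", rather than as a raw string prefix. The Python sketch below illustrates that rule; `prefix_matches` is a hypothetical helper written for this example, and it leaves out everything else an ingress controller does (hosts, rewrites, TLS).

```python
def prefix_matches(rule_path: str, request_path: str) -> bool:
    """Element-wise prefix match in the style of pathType: Prefix."""
    # Split both paths on "/" and drop empty pieces, so trailing slashes are ignored.
    rule_parts = [part for part in rule_path.split("/") if part]
    request_parts = [part for part in request_path.split("/") if part]
    # The request matches when its leading elements equal the rule's elements.
    return request_parts[: len(rule_parts)] == rule_parts


print(prefix_matches("/testpath", "/testpath"))        # True
print(prefix_matches("/testpath", "/testpath/home"))   # True
print(prefix_matches("/testpath", "/testpathology"))   # False
```

So with the rule above, a request to /testpath/home would be routed to my-service, while /testpathology would not.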

Run the following command to apply the ingress file:

kubectl apply -f ingress.yaml

Now run the following command to get access:

sudo vim /etc/hosts

curl -L 192.168.49.2

Next steps for extra learning.

Manage your cluster

Pause Kubernetes without impacting deployed applications:

minikube pause

Unpause a paused instance:

minikube unpause

Stop the cluster:

minikube stop

Change the default memory limit (requires a restart):

minikube config set memory 9001

Browse the catalog of easily installed Kubernetes services:

minikube addons list

Create a second cluster running an older Kubernetes release:

minikube start -p aged --kubernetes-version=v1.16.1

Delete all of the minikube clusters:

minikube delete --all